For Developers

Production Stack for Secure AI Builders

Everything you need to ship AI to production securely — Gateway, Observability, Guardrails, Governance, and Policy Management, all in one platform.

Open source · Self-hostable · Enterprise-ready

10B+

tokens processed daily

1600+

LLMs supported

99.99%

uptime SLA

<3 min

integration time

Why Prompt Shields for Developers

Ship secure AI faster, without compromise

Stop choosing between speed and security. Our platform gives you both with enterprise-grade protection that integrates in minutes.

60% Faster Time-to-Market

Pre-built security guardrails and unified APIs eliminate weeks of custom integration work, so you can focus on features.

- 3-line SDK integration

- Pre-configured policies

- Zero infrastructure changes

Enterprise-Grade Security

Built-in protection against prompt injection, data leakage, and adversarial attacks — validated by leading security teams.

- Real-time threat detection

- PII redaction & compliance

- Full audit trail
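As a rough illustration of what PII redaction with an audit record can look like, here is a minimal sketch. It is not the Prompt Shields implementation: `redactPii`, `PII_PATTERNS`, and the three regular expressions are assumptions made for this example, and real detectors cover far more formats.

```typescript
// Hypothetical sketch of regex-based PII redaction; not the Prompt Shields API.
type PiiKind = "EMAIL" | "PHONE" | "SSN";

interface RedactionResult {
  text: string;                    // input with matches replaced by placeholders
  counts: Record<PiiKind, number>; // per-kind totals, usable as an audit record
}

// Deliberately simplified patterns (US-style phone and SSN formats only).
const PII_PATTERNS: ReadonlyArray<[PiiKind, RegExp]> = [
  ["EMAIL", /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g],
  ["SSN", /\b\d{3}-\d{2}-\d{4}\b/g],
  ["PHONE", /\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b/g],
];

function redactPii(input: string): RedactionResult {
  const counts: Record<PiiKind, number> = { EMAIL: 0, PHONE: 0, SSN: 0 };
  let text = input;
  for (const [kind, pattern] of PII_PATTERNS) {
    // Replace every match with a typed placeholder and count it.
    text = text.replace(pattern, () => {
      counts[kind] += 1;
      return `[${kind}]`;
    });
  }
  return { text, counts };
}
```

Running the redaction before a prompt leaves your network means the provider only ever sees the placeholders, while the counts can be logged without storing the sensitive values themselves.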

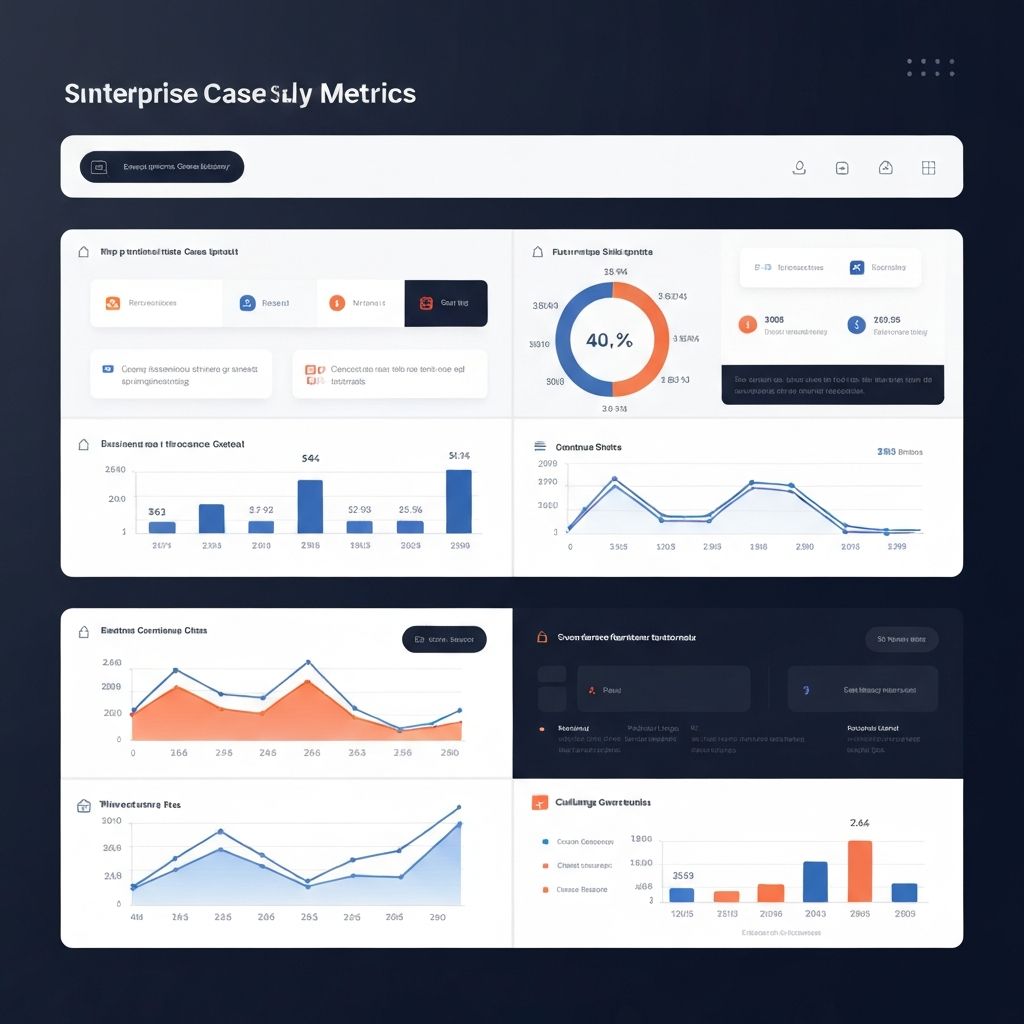

40% Cost Reduction

Intelligent caching, smart routing, and usage analytics help you optimize every token and reduce LLM expenses significantly.

- Semantic caching

- Dynamic model routing

- Real-time cost monitoring

How It Works

From integration to production in 3 steps

Integrate SDK

Add our lightweight SDK to your codebase with a single import. Works with any LLM provider.

Configure Policies

Set up guardrails, routing rules, and security policies through our intuitive dashboard.

Monitor & Scale

Deploy with confidence. Track performance, costs, and security events in real time.

The Difference

Building AI without vs. with Prompt Shields

Without Prompt Shields

- ✗ Weeks of custom security implementation

- ✗ Managing multiple provider SDKs

- ✗ No visibility into LLM behavior

- ✗ Compliance concerns and audit gaps

With Prompt Shields

- Security built-in from day one

- Single unified API for 1600+ models

- Real-time observability & alerts

- Complete audit trail for compliance

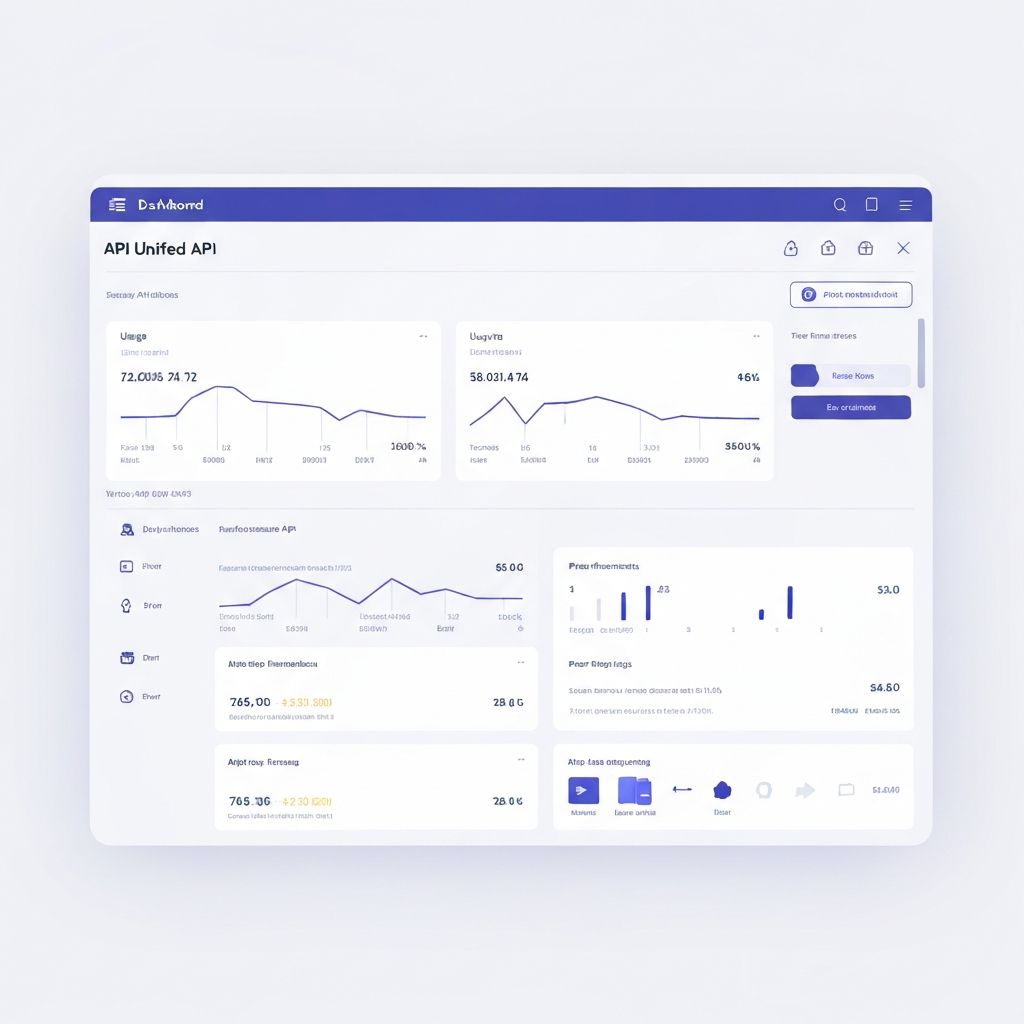

End-to-end LLM Orchestration

Build · Monitor · Scale

Stop wasting time integrating models

Access 1600+ LLMs via a unified API, so you can focus on building, not managing provider SDKs.

Eliminate the guesswork

Monitor LLM behavior, catch anomalies early, and manage usage proactively with real-time observability dashboards.

Keep AI outputs in check

Apply guardrails to prevent harmful outputs, enforce content policies, and ensure compliance at the network level.

No need to hard-code prompts

Manage prompts centrally with version control, A/B testing, and collaborative workflows for your entire team.

Production-ready agent workflows

Deploy and govern AI agents with centralized authentication, access control, and full observability.

Unified Access

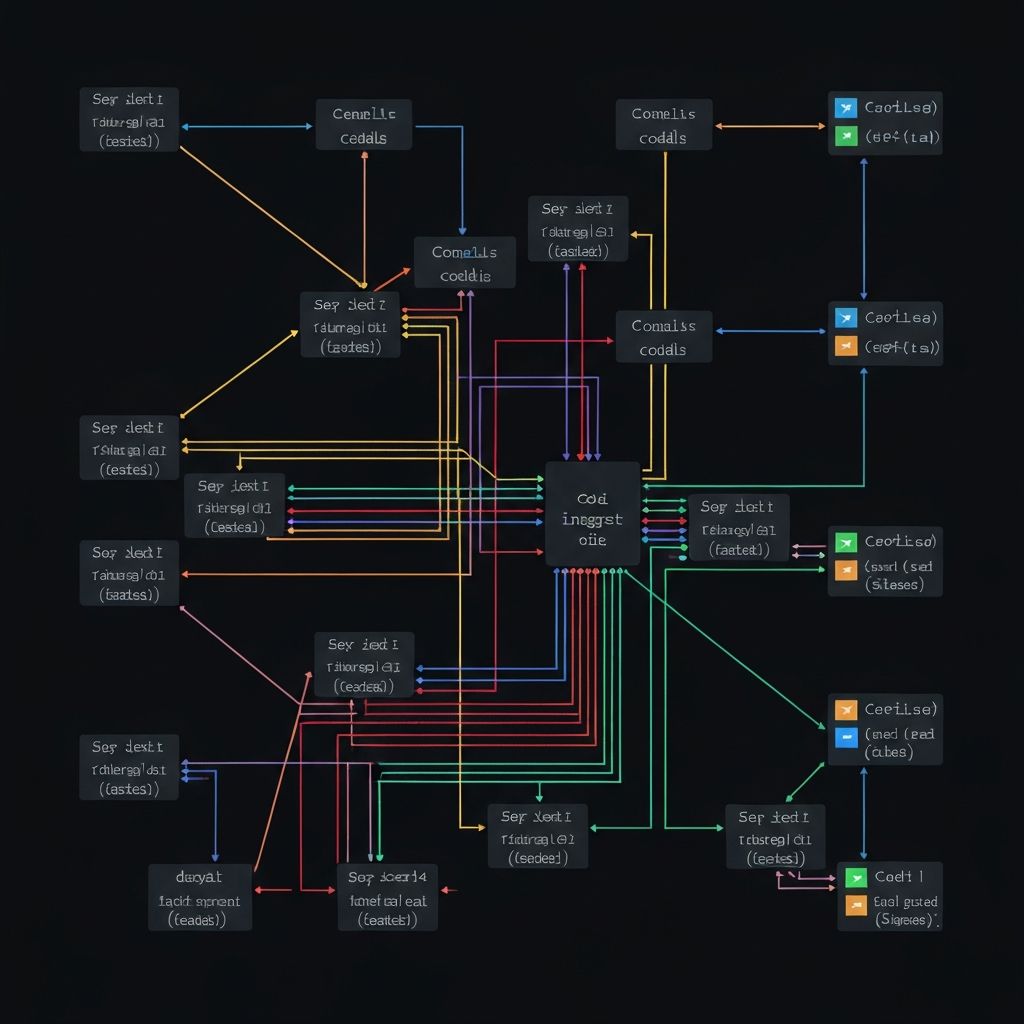

Introducing the AI Gateway Pattern

Connect, manage, and secure AI interactions across all providers with centralized control, real-time monitoring, and enterprise-grade governance.

Access any model via unified API

Connect to 1600+ LLMs and providers across different modalities — no separate integration required.

Smart routing and failover

Dynamically switch between models, distribute workloads, and ensure uptime with configurable routing rules.
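To make the failover idea concrete, here is a minimal sketch of ordered fallback across providers. The `Provider` type and `completeWithFallback` function are hypothetical names for this example, not part of the Prompt Shields SDK; a real gateway would also weigh latency, cost, and error type when choosing the next model.

```typescript
// Hypothetical sketch: a "provider" is any async function from prompt to text.
type Provider = { name: string; call: (prompt: string) => Promise<string> };

interface RoutedResult {
  provider: string;   // provider that produced the output
  output: string;
  failures: string[]; // providers that failed before one succeeded
}

async function completeWithFallback(
  providers: Provider[],
  prompt: string,
): Promise<RoutedResult> {
  const failures: string[] = [];
  // Try providers in priority order and return on the first success.
  for (const provider of providers) {
    try {
      const output = await provider.call(prompt);
      return { provider: provider.name, output, failures };
    } catch {
      failures.push(provider.name);
    }
  }
  throw new Error(`All providers failed: ${failures.join(", ")}`);
}
```

Returning the list of failed providers alongside the result keeps the failover visible to monitoring instead of hiding it behind a successful response.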

Optimize costs with caching

Reduce latency and save costs with simple and semantic caching for repeat requests.
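The difference between simple and semantic caching can be sketched as follows. This is an illustrative assumption, not the platform's implementation: `SemanticCache`, `keywordEmbed`, and the 0.9 similarity threshold are made up for the example, and `keywordEmbed` is a toy stand-in for a real embedding model.

```typescript
// Hypothetical sketch: exact-match cache with a cosine-similarity fallback.
type Embed = (text: string) => number[];

function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Toy embedding: counts occurrences of each vocabulary term in the text.
function keywordEmbed(vocabulary: string[]): Embed {
  return (text) => {
    const words = text.toLowerCase().split(/[^a-z]+/);
    return vocabulary.map((term) => words.filter((w) => w === term).length);
  };
}

class SemanticCache {
  private exact = new Map<string, string>();
  private entries: { vector: number[]; response: string }[] = [];
  private embed: Embed;
  private threshold: number;

  constructor(embed: Embed, threshold = 0.9) {
    this.embed = embed;
    this.threshold = threshold;
  }

  get(prompt: string): string | undefined {
    // Simple cache: identical prompt text.
    const hit = this.exact.get(prompt);
    if (hit !== undefined) return hit;
    // Semantic cache: closest stored prompt at or above the threshold.
    const query = this.embed(prompt);
    let best: { score: number; response: string } | undefined;
    for (const entry of this.entries) {
      const score = cosineSimilarity(query, entry.vector);
      if (score >= this.threshold && (!best || score > best.score)) {
        best = { score, response: entry.response };
      }
    }
    return best?.response;
  }

  set(prompt: string, response: string): void {
    this.exact.set(prompt, response);
    this.entries.push({ vector: this.embed(prompt), response });
  }
}
```

The threshold is the main design choice: set it too low and unrelated prompts share answers, set it too high and the semantic layer rarely hits.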

Secure key management

Store LLM keys safely in a vault and manage access with virtual keys. Rotate, revoke, and monitor usage with full control.
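The virtual key idea can be sketched in a few lines: applications hold a virtual key, and only the vault knows the provider key behind it. `KeyVault` and its methods are hypothetical names for this example, not the Prompt Shields API, and the sequential IDs stand in for the cryptographically random tokens and encrypted storage a real vault would use.

```typescript
// Hypothetical in-memory sketch of virtual keys; not a secure vault.
interface VirtualKeyRecord {
  providerKey: string;
  revoked: boolean;
  uses: number;
}

class KeyVault {
  private records = new Map<string, VirtualKeyRecord>();
  private nextId = 1;

  // Hand out a virtual key that maps to the real provider key.
  issue(providerKey: string): string {
    const virtualKey = `vk_${this.nextId++}`; // assumption: real IDs would be random
    this.records.set(virtualKey, { providerKey, revoked: false, uses: 0 });
    return virtualKey;
  }

  // Resolve a virtual key at request time and record the usage.
  resolve(virtualKey: string): string {
    const record = this.records.get(virtualKey);
    if (!record || record.revoked) throw new Error("Unknown or revoked virtual key");
    record.uses += 1;
    return record.providerKey;
  }

  // Swap the provider key without changing what applications hold.
  rotate(virtualKey: string, newProviderKey: string): void {
    const record = this.records.get(virtualKey);
    if (!record) throw new Error("Unknown virtual key");
    record.providerKey = newProviderKey;
  }

  revoke(virtualKey: string): void {
    const record = this.records.get(virtualKey);
    if (record) record.revoked = true;
  }

  usage(virtualKey: string): number {
    return this.records.get(virtualKey)?.uses ?? 0;
  }
}
```

Because callers never see the provider key, rotating or revoking access is a vault-side change with no redeploy of the applications that use the virtual key.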

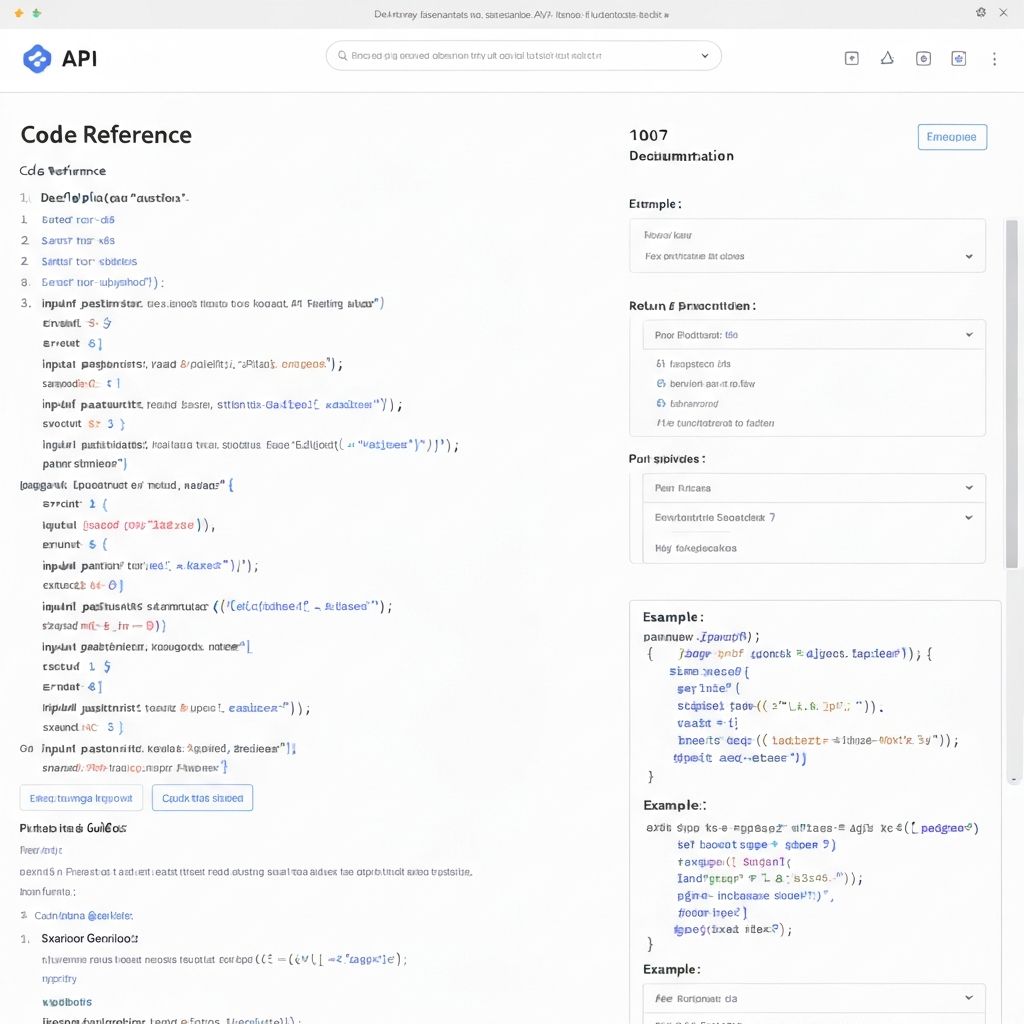

Plug & Play

Integrate in a minute

Add security and observability to your AI stack in just 3 lines of code — no changes to your existing infrastructure.

```typescript
import { PromptShields } from "@prompt-shields/sdk";

const gateway = new PromptShields({
  apiKey: process.env.PROMPT_SHIELDS_KEY
});

async function main() {
  const completion = await gateway.chat.completions.create({
    messages: [{ role: "user", content: "Hello!" }],
    model: "gpt-4",
    // Security policies applied automatically
  });

  console.log(completion.choices[0]);
}

main();
```

Go from pilot to production faster

Enterprise-ready AI Gateway for reliable, secure, and fast deployment.

Conditional Routing

Route requests to providers based on custom conditions

Multimodal by design

Supports vision, audio, and image generation

Fallbacks

Switch between LLMs during failures or errors

Automatic retries

Rescue failed requests with auto-retries

Load balancing

Distribute network traffic across LLMs

Request timeouts

Terminate long-running requests to handle errors gracefully

Canary testing

Test new models and prompts without impacting production traffic

Real-time observability

Track costs and guardrail violations

Network guardrails

Apply policies at the gateway level
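Two of the reliability features above, automatic retries and request timeouts, can be sketched together. This is a generic illustration under stated assumptions, not the gateway's implementation: `withTimeout`, `withRetries`, and the option names are invented for the example, and the backoff schedule (doubling from `baseDelayMs`) is one common choice among several.

```typescript
// Hypothetical sketch: per-attempt timeout plus retries with exponential backoff.

// Rejects if the work does not settle within `ms`.
// Note: this stops waiting but does not cancel the underlying request.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
    work.then(
      (value) => { clearTimeout(timer); resolve(value); },
      (error) => { clearTimeout(timer); reject(error); },
    );
  });
}

async function withRetries<T>(
  attempt: () => Promise<T>,
  options: { retries: number; baseDelayMs: number; timeoutMs: number },
): Promise<T> {
  let lastError: unknown;
  // One initial attempt plus up to `retries` further attempts.
  for (let i = 0; i <= options.retries; i++) {
    try {
      return await withTimeout(attempt(), options.timeoutMs);
    } catch (error) {
      lastError = error;
      if (i < options.retries) {
        // Backoff doubles each time: base, 2x base, 4x base, ...
        const delay = options.baseDelayMs * 2 ** i;
        await new Promise<void>((resolve) => setTimeout(resolve, delay));
      }
    }
  }
  throw lastError;
}
```

Applying the timeout to each attempt rather than to the whole sequence means a single hung request cannot consume the entire retry budget.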

Resources

Learn more about AI security

Documentation

API Reference & Guides

Comprehensive documentation for integrating Prompt Shields into your applications.

Read docs

Case Study

Enterprise Deployments

See how leading companies ship secure AI with Prompt Shields.

View case studies

Guide

AI Security Best Practices

Industry standards and frameworks for securing AI applications.

Learn more

Ready to ship secure AI?

Join hundreds of developers building production-ready AI applications with enterprise-grade security.